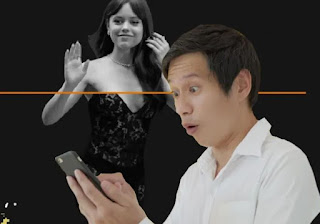

AI Apps That Undress Women’s Photos Surge In Popularity; Women Are Unsafe

The number of apps that use artificial intelligence (AI) to digitally strip people naked in pictures is rising, and women may be harmed by this. People around the world are taking notice of the growing popularity of applications and websites that use AI to undress women in images.

What could go wrong? Are women safe? Can you still share your pictures on Facebook? What might the consequences be? Does AI pose a privacy risk?

These apps alter existing images and videos of people to make them appear nude, without the subject’s consent. This poses a threat to women everywhere.

Many of the apps that undress people with artificial intelligence work only on images of women. The photos are usually taken from social networking sites without the women’s knowledge, and the apps digitally remove their clothing so that they appear entirely naked. In some cases, it is difficult to tell these fake images from real photos.

The social media analytics company Graphika examined 34 companies that offer this undressing service. It classifies the resulting images as non-consensual intimate imagery (NCII).

These websites received a staggering 24 million unique visitors in September alone. The availability of open-source AI image diffusion models has made it easier to create nude images of women.
